Estimated Reading Time: 15 minutes

In regression problems, we often model the conditional mean of a conditional probability distribution by minimizing the least squares (-loss) between the input and target variables (this can be shown through a brief derivation using the calculus of variations). For more complicated multimodal distributions, however, using merely the conditional mean of a univariate Gaussian can prove to be problematic.

In this blog post, we show how a neural network can represent more general distributions (in our case, we fit a Gaussian mixture model) and then show how one might implement such a network in code. Such models are known as mixture density networks, and we will see how to model the errors and corresponding outputs.

Motivation

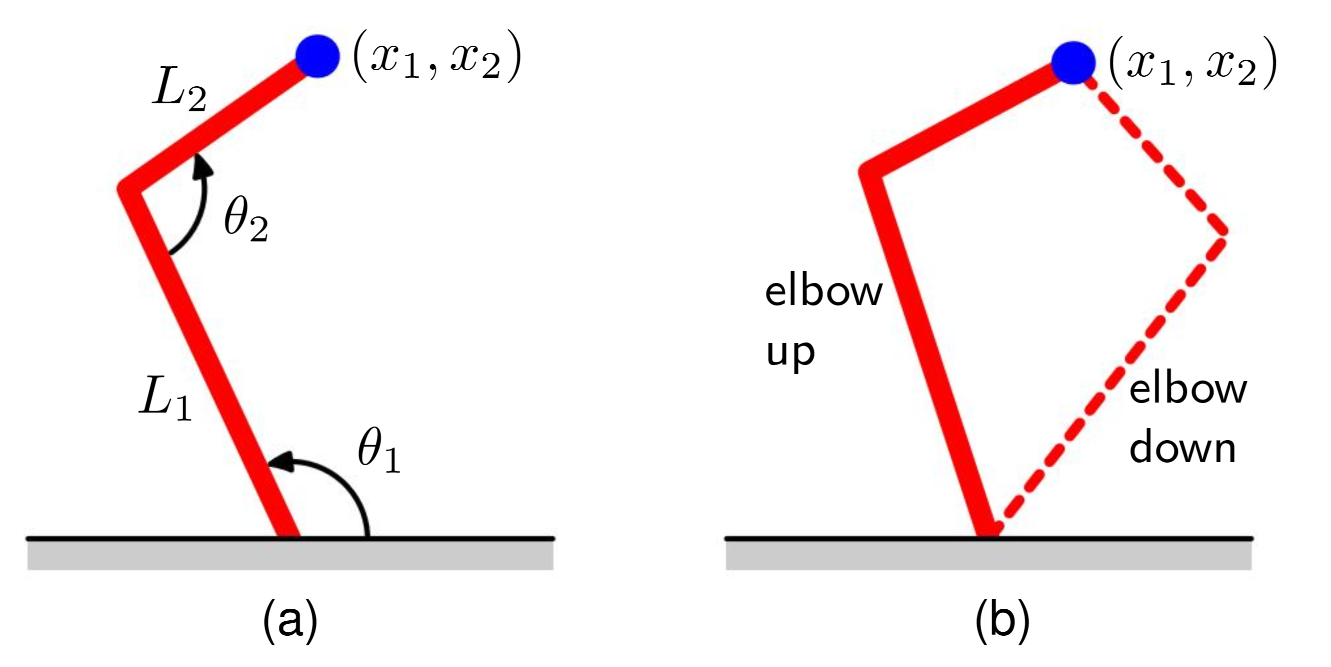

For simple regression problems, we can model the conditional distribution using a Gaussian. This can lead to disastrous results however, for outputs with non-Gaussian distributions. This can be seen, for example, in inverse problems. For a simple example, consider the example of the inverse problem in robot kinematics shown below:

The forward problem involves finding the end-effector position of a two-link robot arm that has arm lengths and , is tilted at an angle from the ground, and has an inter-arm angle of . The forward problem, given the set of parameters, has a unique solution. However, the inverse problem of finding the corresponding angles and lengths has multiple solutions (and thus multiple modes) as shown in the second image. Similarly, other inverse problems are readily seen to be multimodal.

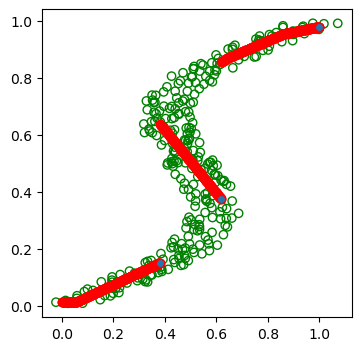

For example, consider a data set of elements in generated as follows: sample a set of values uniformly from the interval and define the corresponding target values where is some uniform noise in the range to .

import numpy as np

import matplotlib.pyplot as plt

import torch

import torch.nn as nn

from torch.utils.data import Dataset, DataLoader

from tqdm import tqdm

import torch.nn.functional as F

N = 300

rng = np.random.default_rng(19)

x = rng.random(N)

y = x + 0.3*np.sin(2*np.pi*x) + (0.2*rng.random(x.size) - 0.1)

plt.scatter(x, y, edgecolors = 'g', facecolors='none')

class train_dataset(Dataset):

def __init__(self, x , y):

self.x = torch.from_numpy(x).type(torch.FloatTensor)

self.y = torch.from_numpy(y).type(torch.FloatTensor)

def __len__(self):

return len(self.x)

def __getitem__(self, idx):

return self.x[idx].unsqueeze(0), self.y[idx].unsqueeze(0)

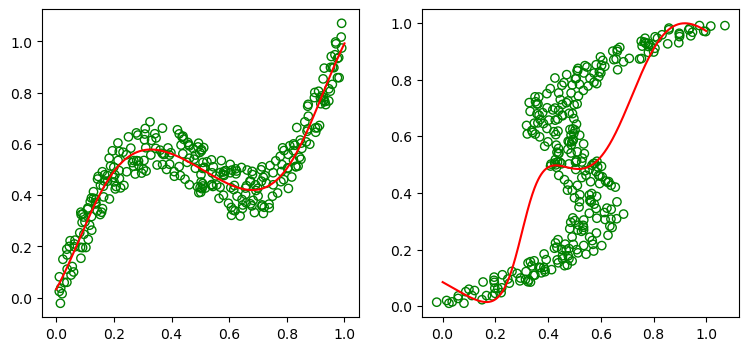

The forward regression problem of mapping a function can readily be solved by doing maximum-likelihood under a Gaussian distribution using the standard L2-loss (say, by fitting a neural network or defining suitable basis functions and then doing linear regression). The inverse problem, however, of finding a mapping leads to very poor results. We show this by fitting a neural network with two hidden layers (with 6 hidden units per hidden layer) to both problems and minimizing the L2-loss. Note we use the softplus activation due to the limited number of units and layers (we wish to fit a "smooth" function like a polynomial. Using ReLUs can work too but they tend to give "harder" functions due to the limited number of connections in our chosen model).

device = 'cuda' if torch.cuda.is_available() else 'cpu'

class NeuralNet(nn.Module):

'''

Simple Two Layer Network with 6 hidden units.

'''

def __init__(self):

super().__init__()

self.stack = nn.Sequential(

nn.Linear(1, 6),

nn.Sigmoid(),

nn.Linear(6,6),

nn.Softplus(),

nn.Linear(6, 1)

)

def forward(self, x):

out = self.stack(x)

return out

forward_model = NeuralNet().to(device)

inverse_model = NeuralNet().to(device)

#hyperparameters

learning_rate_forward = 0.1

learning_rate_inverse = 0.05

batch_size = N

epochs = 2000

loss_fn = nn.MSELoss()

forward_optimizer = torch.optim.Adam(forward_model.parameters(), lr = learning_rate_forward)

inverse_optimizer = torch.optim.Adam(inverse_model.parameters(), lr = learning_rate_inverse)

train_dataloader = DataLoader(train_dataset(x, t), batch_size = batch_size, shuffle = True)

The training loop for the model can be shown as follows:

def train():

forward_model.train()

inverse_model.train()

for epoch in tqdm(range(epochs)):

forward_losstot, inverse_losstot = 0,0

for batch, (x, t) in enumerate(train_dataloader):

x, t = x.to(device), t.to(device)

forward_pred = forward_model(x)

inverse_pred = inverse_model(t)

forward_loss = loss_fn(forward_pred, t)

inverse_loss = loss_fn(inverse_pred, x)

with torch.no_grad():

forward_losstot += forward_loss.item()

inverse_losstot += inverse_loss.item()

forward_loss.backward()

inverse_loss.backward()

forward_optimizer.step()

inverse_optimizer.step()

forward_optimizer.zero_grad()

inverse_optimizer.zero_grad()

tqdm.write(f'epoch: {epoch}, forward loss: {forward_losstot}, inverse loss: {inverse_losstot}')

train()

which gives the following fits:

We thus see that using least squares (which corresponds to a maximum likelihood model under a Gaussian distribution using a least squares fit) gives a very poor fit for the non-Gaussian inverse problem. We thus seek a general framework for modeling conditional distributions. This can be achieved by using mixture models for the conditional distributions , which can approximate probability distributions to arbitrary accuracy with enough mixtures (see [3]). These class of models are known as mixture density networks. For this blog post, we focus mainly on Gaussian Mixture Density Networks for which the subpopulations are Gaussian.

Gaussian Mixture Density Networks

Gaussian mixture density networks use neural networks to model the parameters of a conditional probability that uses a Gaussian mixture model (the components are Gaussian). Here, we assume that the Gaussians are isotropic, ie. the covariance matrices of the Gaussians are a diagonal matrix of the form . That is, where we assume there are classes (this is a hyperparameter). Here, can be interpreted as the class-priors for class , is the mean of the for class and is the standard deviation of the class. Note the dependence on for each of the given parameters, which shows that the density parameters are dependent on the choice of input . Such models are called heteroscedastic because of the multiple variances we model for each subpopulation.

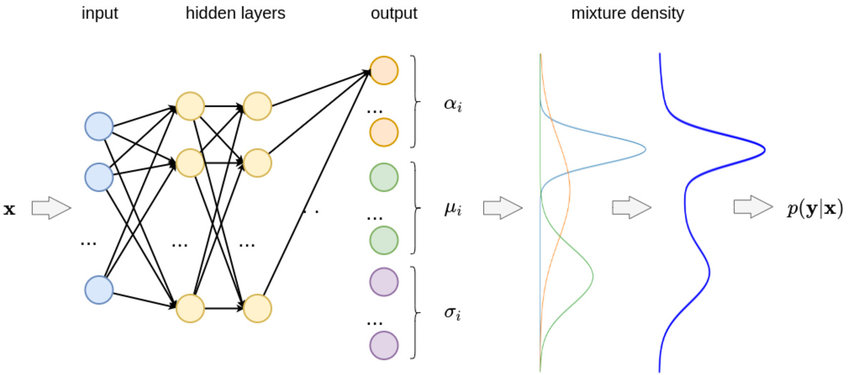

To model the density function using a neural network, we take the outputs of the neural networks to be the various parameters of the density function, namely , and as illustrated below:

If, for example, we assume the density function has components, and the output has components, then the network will have as outputs:

- Pre-activation for class priors : The network has output-unit pre-activations that determine the mixing coefficients . Since the mixing coefficients must satisfy we take the class priors to be given by using the softmax of the pre-unit activations:

- Pre-activation for the variances: The network has output-unit pre-activations . We must have the variances so we take the exponentials of the pre-activations using

- Pre-activation for the means: The networks has pre-activations for the mean where the mean for the th Gaussian can be represented

by using the pre-activations directly (so the output activations are the identity):

This gives a total of parameters.

The learnable parameters of the model can be using the maximum likelihood estimate(MLE) or equivalently minimizing the negative log likelihood: This error function can then be optimized using standard optimizers (SGD, ADAM, etc.).

Predictive Distribution

Once a mixture density function has been trained, we have a model for the conditional density function which can be used to predict the value of the output vector for new inputs. Assuming a squared loss, one can use the calculus of variations to show the conditional mean minimizes the loss for the regression problem. For the mixture model, we have the conditional mean is given by which can be interpreted as a weighted average of the means of the component Gaussians using the class priors. Note that a mixture density network can reproduce the least squares result as a special case by approximating the conditional mean by assuming the distribution is unimodal and there is only one class (ie. ). Similarly, we can calculate Note, however, that for multimodal distributions the conditional mean can often give a poor representation of the data. Going back to the robot kinematics example, note that the average of the two modes (the "elbow up" and "elbow down" solutions) to the inverse problem have an average which is itself not a solution to the problem. The conditional mode, on the other hand, often gives a much better representation of the data and can be approximated by taking the mean of the component with largest prior .

Let's see how this is applied in practice. We return to our toy example of data defined above. Here, we assume there to be component Gaussians which corresponds to output units corresponding to the mean, variance, and prior parameters respectively. The code for the model is shown below:

class MixtureDensityNet(nn.Module):

'''

Two Layer mixture density network with 3 classes.

'''

def __init__(self, n_components):

super().__init__()

self.components = n_components

self.network = nn.Sequential(

nn.Linear(1, 5),

nn.ReLU(),

nn.Linear(5,5),

nn.ReLU(),

nn.Linear(5, self.components*3),

)

def forward(self, x, epsilon=1e-6):

preact = self.network(x)

pi = F.softmax(preact[...,:self.components], -1)

mu = preact[...,self.components:self.components*2]

sigma = torch.exp(preact[...,self.components*2:self.components*3] + epsilon)

return pi, mu, sigma

def mode(self, x):

pi, mu, _ = self.forward(x)

argpi = torch.argmax(pi)

mu = torch.flatten(mu)

return mu[argpi.item()]

We set the hyperparameters as follows:

device = 'cuda' if torch.cuda.is_available() else 'cpu'

mixture_model = MixtureDensityNet(3).to(device)

#hyperparameters

learning_rate = 0.001

batch_size = 300

epochs = 5000

optimizer = torch.optim.Adam(mixture_model.parameters(), lr=learning_rate)

dataloader = DataLoader(train_dataset(x, t), batch_size = batch_size, shuffle = False)

and define a custom loss function for the density network given by above:

class MixtureLoss(nn.Module):

def __init__(self):

super().__init__()

@classmethod

def gaussian(cls, t, mu, sigma):

res = (t.expand_as(mu) - mu) * torch.reciprocal(sigma)

res = -0.5 * (res * res)

return torch.exp(res)*torch.reciprocal(sigma) * (1.0/ np.sqrt(2.0* np.pi))

def forward(self, pred, target):

pi, mu, sigma = pred

result = MixtureLoss.gaussian(target, mu, sigma) * pi

result = torch.sum(result, dim = 1)

result = -torch.log(result)

return torch.mean(result)

loss_fn = MixtureLoss()

The training code is then given as follows:

def train():

mixture_model.train()

for epoch in tqdm(range(epochs)):

losstot = 0

for batch, (x, t) in enumerate(dataloader):

x, t = x.to(device), t.to(device)

pred = mixture_model(t)

loss = loss_fn(pred, x)

with torch.no_grad():

losstot += loss.item()

loss.backward()

optimizer.step()

optimizer.zero_grad()

tqdm.write(f'epoch: {epoch}, loss: {losstot}')

train()

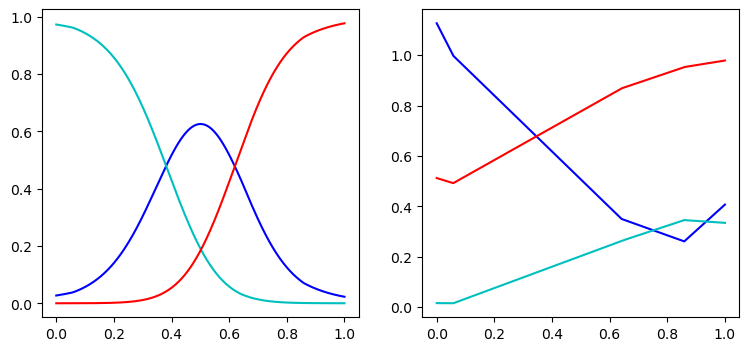

We then plot the mixing coefficients and means as a function of .

We also plot the approximate conditional modes, given by the mean of the most probable component below:

Note the resulting prediction is much more accurate compared to our original unimodal prediction, as desired.

General Case

In this blog post, we focused mainly on Gaussian Mixture Density networks with isotropic Gaussians. This can be extended to general covariance matrices using the Cholesky Factorization (see [2] and [5]). This gives a predictive distribution of The training objective then becomes to minimize the negative log likelihood where is the LogSumExp. An excellent general tensorflow implementation of the general case writen by Axel Brando can be found here.

Full code for plotting and other details for the density network shown in this blog post can be found in my github in the corresponding repository.

References

[1] Brando, A, Mixture Density Networks implementation for distribution and uncertainty estimation, (2016), Github Repository

[2] Bishop, C. M. Mixture density networks. (1994).

[3] Bishop, C. M. Deep Learning: Foundations and Concepts (2024) Springer

[4] A. DasGupta, Asymptotic Theory Of Statistics And Probability. New York: Springer, 200

[5] Williams, P. M. (1996). Using neural networks to model conditional multivariate densities. Neural Comput., 8(4), 843–854